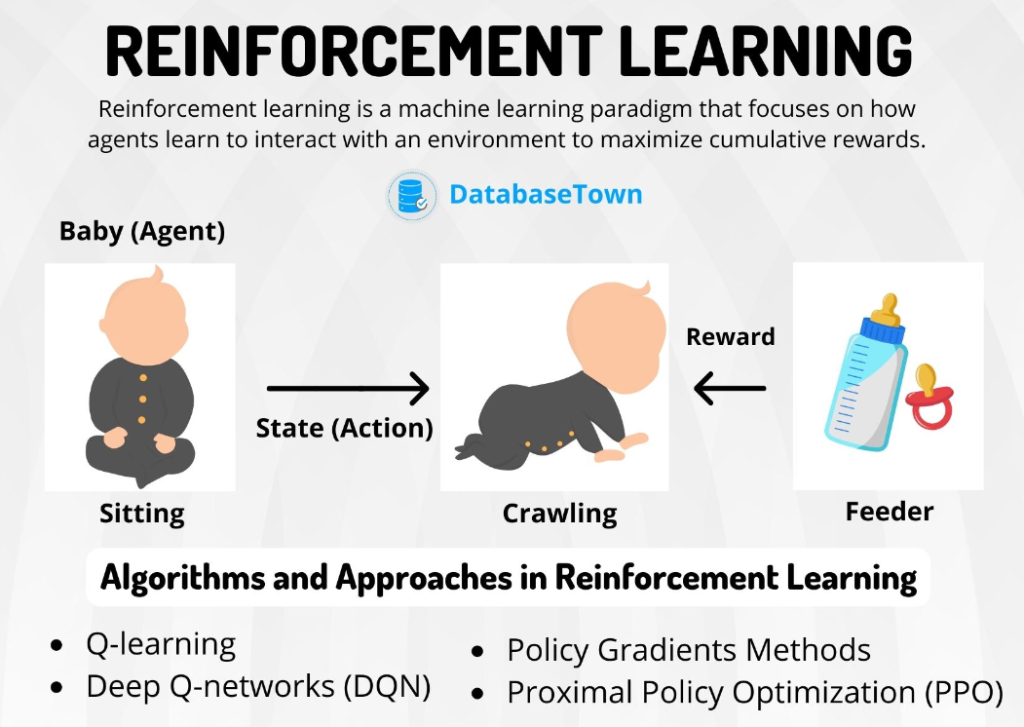

Reinforcement Learning (RL) is a branch of machine learning where an agent learns to make decisions by interacting with an environment. Unlike supervised learning, an RL agent is not given labeled examples of correct behavior, and unlike unsupervised learning, its goal is not to find structure in unlabeled data. Instead, it learns by trial and error, adjusting its behavior to maximize a cumulative reward signal.

Key Components of Reinforcement Learning

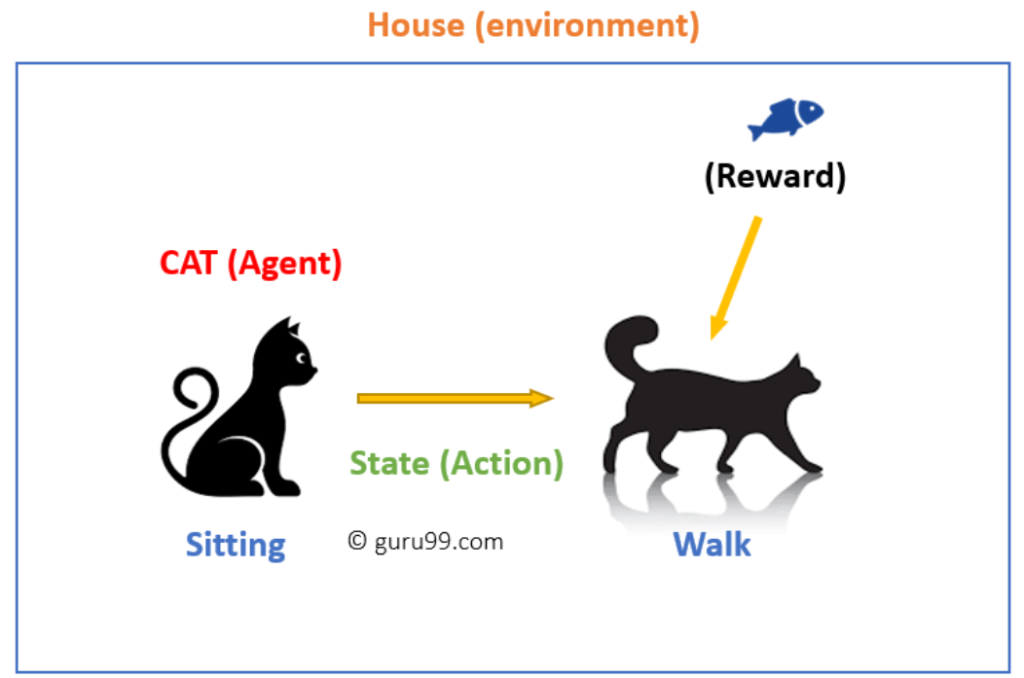

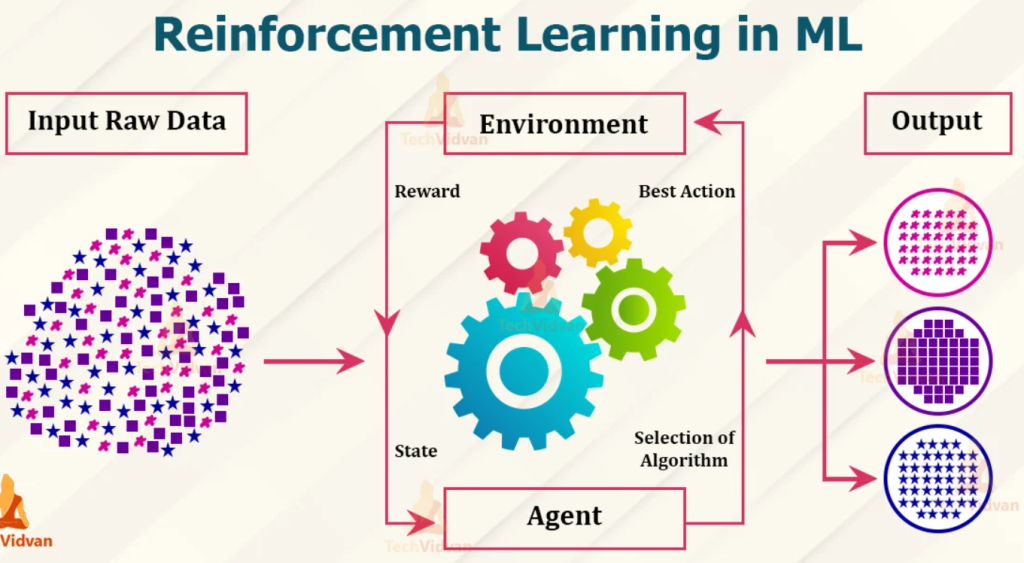

- Agent: The learner and decision-maker that interacts with the environment.

- Environment: The world in which the agent operates.

- State: The current situation or condition of the environment.

- Action: The choices available to the agent in a given state.

- Reward: A feedback signal indicating the success of an action.

- Policy: A strategy that maps states to actions.

- Value function: Estimates the expected cumulative future reward from a given state when the agent follows a particular policy.
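To make "expected future reward" concrete, one common way to score a sequence of rewards is the discounted return, where later rewards count for less than earlier ones. The sketch below assumes that convention; the discount factor `gamma` and the helper name `discounted_return` are introduced here for illustration and are not part of the definitions above. A value function can then be read as an estimate of the average of this quantity over many trajectories starting from the same state.

```python
def discounted_return(rewards, gamma=0.9):
    """Sum of rewards, each weighted by gamma raised to its time step.

    `gamma` (the discount factor) is an assumption of this sketch.
    """
    g = 0.0
    # Work backwards so each step needs only one multiply and one add.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# Three rewards received at steps 0, 1 and 2:
# 1.0 + 0.5 * 0.0 + 0.25 * 2.0 = 1.5
print(discounted_return([1.0, 0.0, 2.0], gamma=0.5))  # 1.5
```

With `gamma` close to 1 the agent is treated as far-sighted; with `gamma` close to 0 only the immediate reward matters.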

The Reinforcement Learning Process

- Initialization: The agent starts in an initial state.

- Action Selection: The agent chooses an action based on its current policy.

- Environment Interaction: The agent takes the action, and the environment transitions to a new state and provides a reward.

- Learning: The agent updates its policy or value function based on the received reward.

- Repeat: The process continues iteratively.
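The steps above can be sketched as a short loop. This is a minimal illustration rather than a reference implementation: `CorridorEnv` is a hypothetical toy environment invented for this example, its `reset`/`step` interface is an assumption loosely modeled on common RL toolkits, and the learning step is left as a comment because it depends on the algorithm used.

```python
import random


class CorridorEnv:
    """Hypothetical toy environment: cells 0..length-1, start at 0, goal at the last cell."""

    def __init__(self, length=5):
        self.length = length
        self.state = 0

    def reset(self):
        self.state = 0
        return self.state

    def step(self, action):
        # Action 1 moves right, any other action moves left; walls clamp the position.
        move = 1 if action == 1 else -1
        self.state = min(max(self.state + move, 0), self.length - 1)
        done = self.state == self.length - 1
        reward = 1.0 if done else 0.0  # reward only when the goal is reached
        return self.state, reward, done


def run_episode(env, policy, max_steps=1000):
    state = env.reset()                          # Initialization
    total_reward, steps = 0.0, 0
    while steps < max_steps:                     # Repeat
        action = policy(state)                   # Action selection
        state, reward, done = env.step(action)   # Environment interaction
        # Learning: an actual agent would update its policy or value function here.
        total_reward += reward
        steps += 1
        if done:
            break
    return total_reward, steps


rng = random.Random(0)
# A random policy stands in for a learned one.
print(run_episode(CorridorEnv(), policy=lambda s: rng.choice([0, 1])))
```

Swapping the random policy for one that improves from the observed rewards is what turns this loop into a learning algorithm.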

Exploration vs. Exploitation

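A crucial aspect of RL is balancing exploration and exploitation. An agent that only explores never benefits from what it has learned, while one that only exploits may settle on a mediocre option and never find a better one.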

- Exploration: The agent tries new actions to discover better options.

- Exploitation: The agent sticks to actions that have yielded good rewards in the past.
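One simple and widely used way to trade the two off is an epsilon-greedy rule: with a small probability the agent picks a random action, and otherwise it picks the action with the highest estimated value. The sketch below assumes the agent keeps a list of value estimates per action; the function name and the `epsilon` parameter are illustrative choices, not something defined above.

```python
import random


def epsilon_greedy(q_values, epsilon, rng=random):
    """Pick an action index from a list of estimated action values."""
    if rng.random() < epsilon:
        # Explore: choose uniformly among all actions.
        return rng.randrange(len(q_values))
    # Exploit: choose the action with the highest current estimate.
    return max(range(len(q_values)), key=lambda a: q_values[a])


# With epsilon=0.1 the agent exploits about 90% of the time.
action = epsilon_greedy([0.2, 0.8, 0.5], epsilon=0.1)
print(action)
```

A common refinement, not shown here, is to start with a large `epsilon` and shrink it as the estimates become more reliable.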

Reinforcement Learning Algorithms

Several algorithms have been developed for reinforcement learning:

- Q-Learning: Learns an estimate of the optimal action-value function, which assigns a Q-value to each state-action pair.

- Deep Q-Networks (DQN): Combines Q-learning with deep neural networks for handling complex state spaces.

- Policy Gradient Methods: Directly optimize the policy to maximize expected rewards.

- Actor-Critic Methods: Combine policy-based and value-based methods: an actor (the policy) selects actions while a critic (a learned value function) evaluates them.
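As an illustration of the first algorithm in the list, here is a minimal sketch of a single tabular Q-learning update. It assumes a small, discrete set of states and actions stored in a dictionary; the learning rate `alpha`, the discount factor `gamma`, and the function name are assumptions made for this example.

```python
from collections import defaultdict


def q_learning_update(q, state, action, reward, next_state, n_actions,
                      alpha=0.1, gamma=0.99, done=False):
    """Apply one tabular Q-learning update in place and return the new value."""
    # Value of the best action in the next state (zero if the episode ended).
    best_next = 0.0 if done else max(q[(next_state, a)] for a in range(n_actions))
    target = reward + gamma * best_next
    # Move the current estimate a fraction alpha of the way toward the target.
    q[(state, action)] += alpha * (target - q[(state, action)])
    return q[(state, action)]


q = defaultdict(float)  # unseen state-action pairs start at 0.0
q_learning_update(q, state=3, action=1, reward=1.0, next_state=4,
                  n_actions=2, alpha=0.5, done=True)
print(q[(3, 1)])  # 0.0 + 0.5 * (1.0 - 0.0) = 0.5
```

Deep Q-Networks follow the same idea but replace the table with a neural network, which is what lets them handle state spaces too large to enumerate.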

Challenges and Considerations

- Exploration-exploitation dilemma: Balancing the need to explore new actions against the need to exploit known good ones.

- Sparse rewards: Learning can be difficult when rewards are infrequent or delayed.

- Credit assignment problem: Determining which actions contributed to a reward can be challenging.

- Sample inefficiency: RL agents often require a large number of interactions with the environment to learn effectively.

Applications of Reinforcement Learning

- Robotics: Controlling robots to perform tasks in complex environments.

- Game playing: Mastering board games such as chess and Go, as well as video games.

- Finance: Algorithmic trading and portfolio management.

- Healthcare: Optimizing treatment plans and drug discovery.

- Recommendation systems: Personalizing recommendations based on user preferences.

Conclusion

Reinforcement learning is a powerful technique for solving complex decision-making problems. By learning from interactions with the environment, agents can develop effective policies for achieving desired goals. While it presents challenges such as sparse rewards and sample inefficiency, the range of applications of RL is broad and continues to expand.