Best Practices for Deploying Microservices with Kubernetes

The Role of Kubernetes in Microservices Deployment

Kubernetes has become the go-to platform for orchestrating the deployment, scaling, and management of containerized applications, especially in microservices architectures. By managing the lifecycle of containers and ensuring their availability, Kubernetes enables DevOps teams to deploy, scale, and monitor microservices with greater efficiency and reliability. In this post, we will explore the best practices for deploying microservices with Kubernetes, focusing on how to optimize your deployments, ensure security, and improve scalability and performance.

Key Features of Kubernetes for Microservices Deployment

- Container Orchestration: Kubernetes automates container management, ensuring that microservices are deployed consistently across different environments.

- Scaling and Load Balancing: Kubernetes can automatically scale microservices based on demand and manage load balancing to ensure efficient resource utilization.

- Fault Tolerance and Self-Healing: Kubernetes restarts containers that fail, replaces pods that are lost, and reschedules workloads when a node goes down, which helps keep microservices available without manual intervention.

- Service Discovery: Kubernetes provides service discovery, allowing microservices to automatically find and communicate with each other without manual configuration.

- Declarative Configuration: Kubernetes uses declarative configuration files to define the desired state of the system, ensuring consistency and repeatability in deployments.
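
The declarative model and self-healing described above both rest on a control loop that compares desired state with observed state and acts on the difference. The toy sketch below illustrates only that idea; it is not how Kubernetes controllers are implemented, and the names `reconcile`, `desired_replicas`, and `running_pods` are hypothetical.

```python
def reconcile(desired_replicas: int, running_pods: list) -> dict:
    """Return the actions needed to move observed state toward desired state."""
    diff = desired_replicas - len(running_pods)
    if diff > 0:
        # Too few pods: ask for the missing ones to be started.
        return {"start": diff, "stop": []}
    # Enough or too many pods: stop the surplus (here, the last ones listed).
    return {"start": 0, "stop": running_pods[desired_replicas:]}


# One pod of three has crashed, so the loop asks for one replacement.
print(reconcile(3, ["web-a", "web-b"]))  # {'start': 1, 'stop': []}
# The desired count was lowered to 1, so the surplus pod is stopped.
print(reconcile(1, ["web-a", "web-b"]))  # {'start': 0, 'stop': ['web-b']}
```

Because the loop only ever looks at the gap between the two states, the same logic handles crashes, scale-ups, and scale-downs.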

1. Containerizing Microservices for Kubernetes

Before deploying microservices to Kubernetes, they need to be packaged into container images. Containers provide consistency across environments and isolation for each service, making them a good fit for microservices. Building images with Docker is a common approach, and Kubernetes runs them on any runtime that implements its Container Runtime Interface (CRI), such as containerd or CRI-O.

Best Practices for Containerizing Microservices

- Use Lightweight Base Images: Choose minimal base images, such as Alpine Linux, to reduce the size of the containers and minimize the attack surface.

- Separation of Concerns: Package each microservice in its own container image, so that each service is isolated and can be built, deployed, and scaled independently.

- Environment Variables for Configuration: Store configuration settings in environment variables, allowing microservices to be easily reconfigured without modifying the container image.

- Container Image Versioning: Tag container images with explicit versions so that you can track exactly which build of a microservice is running. Avoid the “latest” tag: it can point to a different image over time, which makes deployments and rollbacks unpredictable.

- Minimize Dependencies: Ensure that the container only includes the necessary libraries and dependencies to run the service. This reduces complexity and improves performance.
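
The versioning advice above can be enforced automatically, for example as a check in a build pipeline. The following is a minimal sketch, assuming image references of the usual `registry/repository:tag` or `repository@sha256:digest` form; the helper names `image_tag` and `is_pinned` and the sample references are hypothetical.

```python
from typing import Optional


def image_tag(image: str) -> Optional[str]:
    """Return the tag of an image reference, or None if no tag is given."""
    # Drop a digest suffix such as "@sha256:..." before looking for a tag.
    name = image.split("@", 1)[0]
    last_segment = name.rsplit("/", 1)[-1]
    # A colon in the last path segment separates the tag; a colon earlier in
    # the reference belongs to a registry port such as "registry:5000".
    if ":" in last_segment:
        return last_segment.rsplit(":", 1)[1]
    return None


def is_pinned(image: str) -> bool:
    """True if the image has a digest or an explicit tag other than 'latest'."""
    if "@sha256:" in image:
        return True
    tag = image_tag(image)
    # An untagged reference resolves to "latest", so it counts as unpinned.
    return tag is not None and tag != "latest"


for ref in ["orders:1.4.2", "orders:latest", "orders", "registry:5000/shop/orders"]:
    print(ref, is_pinned(ref))
```

Only the first reference in the loop is reported as pinned; the other three would all resolve to whatever image currently carries the “latest” tag.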

2. Automating Deployments with Kubernetes Deployments and Helm

Kubernetes provides several tools to automate the deployment of microservices, making it easier to manage large-scale applications. Kubernetes Deployments and Helm charts are commonly used for automating deployment processes.

Best Practices for Automating Deployments

- Use Kubernetes Deployments: Define your microservices in Kubernetes Deployment resources, which ensure that your microservices are always running as specified and allow for easy scaling and rolling updates.

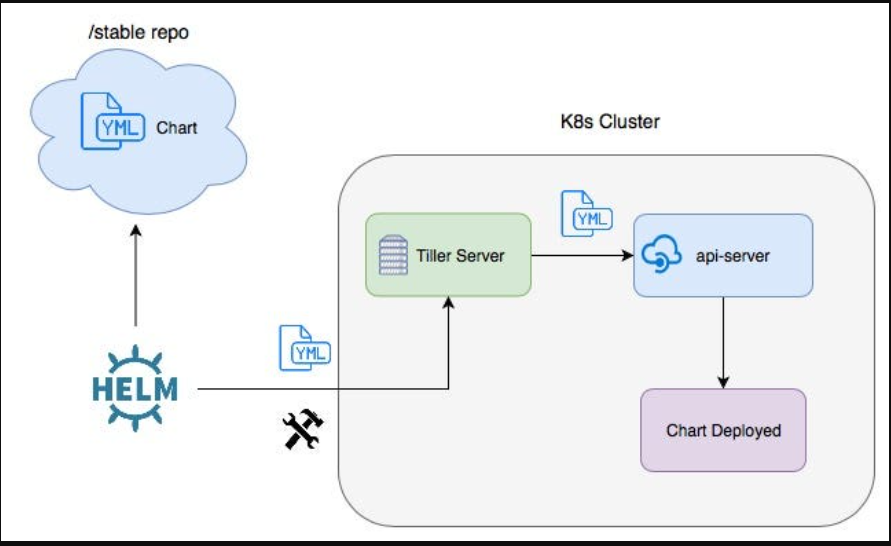

- Helm for Package Management: Use Helm to manage Kubernetes applications. Helm allows you to package your microservices as Helm charts, making deployments easier and more repeatable.

- Rolling Updates and Rollbacks: Leverage Kubernetes’ rolling updates feature to deploy new versions of your microservices without downtime. If something goes wrong, you can quickly roll back to a previous stable version.

- Automate CI/CD Pipelines: Integrate Kubernetes with your CI/CD pipelines to automatically trigger deployments when new code is pushed. Tools like Jenkins, GitLab CI, and Argo CD can help automate this process.
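
As an illustration of the Deployment and rolling-update practices above, the sketch below builds a Deployment manifest as a plain Python dictionary and prints it as JSON, which `kubectl apply -f` accepts alongside YAML. The service name `orders`, the image reference, the port, and the surge settings are assumptions chosen for the example, not values from a real system.

```python
import json


def deployment(name: str, image: str, replicas: int, port: int) -> dict:
    """Build an apps/v1 Deployment manifest with a rolling-update strategy."""
    labels = {"app": name}
    return {
        "apiVersion": "apps/v1",
        "kind": "Deployment",
        "metadata": {"name": name, "labels": labels},
        "spec": {
            "replicas": replicas,
            # The selector must match the labels on the pod template below.
            "selector": {"matchLabels": labels},
            "strategy": {
                "type": "RollingUpdate",
                # Add at most one extra pod at a time and keep all desired
                # replicas available while the update is in progress.
                "rollingUpdate": {"maxSurge": 1, "maxUnavailable": 0},
            },
            "template": {
                "metadata": {"labels": labels},
                "spec": {
                    "containers": [
                        {
                            "name": name,
                            "image": image,
                            "ports": [{"containerPort": port}],
                        }
                    ]
                },
            },
        },
    }


manifest = deployment("orders", "registry.example.com/shop/orders:1.4.2", 3, 8080)
print(json.dumps(manifest, indent=2))
```

A pipeline could write this output to a file, apply it with `kubectl apply -f`, wait on `kubectl rollout status deployment/orders`, and fall back to `kubectl rollout undo deployment/orders` if the new version misbehaves.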

3. Ensuring Scalability with Kubernetes

One of the key benefits of using Kubernetes for deploying microservices is its ability to scale applications based on demand. Kubernetes offers horizontal scaling, which allows you to scale microservices independently, ensuring that resources are efficiently used.

Best Practices for Ensuring Scalability

- Horizontal Pod Autoscaling: Use Kubernetes’ Horizontal Pod Autoscaler (HPA) to automatically scale the number of pods for your microservices based on CPU and memory usage or custom metrics.

- Use Resource Requests and Limits: Define CPU and memory requests and limits for each microservice to ensure that Kubernetes schedules resources efficiently and avoids resource contention.

- Cluster Autoscaling: Enable cluster autoscaling to automatically adjust the number of nodes in the Kubernetes cluster based on resource demand. This ensures that your cluster can handle increasing workloads without manual intervention.

- Load Balancing: Use Kubernetes Services to distribute traffic across the ready pods of each microservice, so that no single pod has to absorb all of the load.

- Stateless Microservices: Ensure that your microservices are stateless, so they can be scaled up and down easily without relying on local storage or session data.
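
To make the autoscaling practices above concrete, the sketch below shows resource requests and limits, an `autoscaling/v2` HorizontalPodAutoscaler manifest built as a Python dictionary, and the replica formula from the Kubernetes documentation, `ceil(currentReplicas * currentMetricValue / desiredMetricValue)`. The specific quantities, the 60% CPU target, and the function names are assumptions for illustration. The real autoscaler additionally clamps the result to the configured minimum and maximum and applies stabilization behavior that is not modeled here.

```python
import math

# CPU utilization is measured relative to the container's CPU request, so the
# target Deployment's containers need `resources.requests` for this to work.
RESOURCES = {
    "requests": {"cpu": "250m", "memory": "256Mi"},
    "limits": {"cpu": "500m", "memory": "512Mi"},
}


def hpa(name: str, min_replicas: int, max_replicas: int, cpu_percent: int) -> dict:
    """Build an autoscaling/v2 HPA that targets a Deployment of the same name."""
    return {
        "apiVersion": "autoscaling/v2",
        "kind": "HorizontalPodAutoscaler",
        "metadata": {"name": name},
        "spec": {
            "scaleTargetRef": {"apiVersion": "apps/v1", "kind": "Deployment", "name": name},
            "minReplicas": min_replicas,
            "maxReplicas": max_replicas,
            "metrics": [{
                "type": "Resource",
                "resource": {
                    "name": "cpu",
                    "target": {"type": "Utilization", "averageUtilization": cpu_percent},
                },
            }],
        },
    }


def desired_replicas(current_replicas: int, current_metric: float,
                     target_metric: float, tolerance: float = 0.1) -> int:
    """Apply ceil(currentReplicas * currentMetricValue / desiredMetricValue)."""
    ratio = current_metric / target_metric
    # Within the tolerance band the replica count is left unchanged.
    if abs(ratio - 1.0) <= tolerance:
        return current_replicas
    return math.ceil(current_replicas * ratio)


# 4 pods averaging 90% CPU against a 60% target scale out to 6 pods.
print(desired_replicas(4, 90, 60))  # 6
```

The formula also shows why stateless services scale cleanly: the autoscaler only changes a replica count, so any pod must be able to serve any request.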

4. Managing Service Discovery and Communication in Microservices

Microservices often need to communicate with each other, and Kubernetes provides several tools for managing service discovery and communication. Service discovery ensures that microservices can find and interact with each other without hardcoding pod IP addresses, which change whenever pods are rescheduled.

Best Practices for Service Discovery and Communication

- Use Kubernetes Services for Discovery: Kubernetes Services provide stable endpoints (DNS names) for accessing microservices, ensuring that other services can discover and communicate with them.

- Internal vs External Services: Use ClusterIP for internal communication between microservices and LoadBalancer or NodePort for exposing services to external traffic.

- Service Meshes: Implement a service mesh like Istio or Linkerd for more advanced service-to-service communication, including traffic management, observability, and security.

- Define Clear API Contracts: Ensure that each microservice exposes a clear API contract that other services can consume. Use API gateways or API management tools to handle incoming traffic and route requests to the correct microservices.
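
The sketch below illustrates the discovery practices above with a ClusterIP Service manifest and the DNS name that cluster DNS assigns to it, which follows the pattern `<service>.<namespace>.svc.<cluster-domain>`. The service name `orders`, the namespace `shop`, and the port numbers are hypothetical, and `cluster.local` is the common default domain rather than a guarantee for every cluster.

```python
import json


def cluster_ip_service(name: str, port: int, target_port: int) -> dict:
    """Build a ClusterIP Service that routes to pods labeled app=<name>."""
    return {
        "apiVersion": "v1",
        "kind": "Service",
        "metadata": {"name": name},
        "spec": {
            "type": "ClusterIP",  # reachable only from inside the cluster
            "selector": {"app": name},
            "ports": [{"port": port, "targetPort": target_port}],
        },
    }


def service_dns_name(name: str, namespace: str = "default",
                     cluster_domain: str = "cluster.local") -> str:
    """Fully qualified DNS name that other pods can use to reach the Service."""
    return f"{name}.{namespace}.svc.{cluster_domain}"


print(json.dumps(cluster_ip_service("orders", 80, 8080), indent=2))
print(service_dns_name("orders", "shop"))  # orders.shop.svc.cluster.local
```

A client in the same namespace can use the short name `orders`, so only cross-namespace callers need the longer form.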

5. Securing Microservices on Kubernetes

Security is a critical aspect of deploying microservices, especially as the number of services and their interactions grow. Kubernetes provides several tools for securing microservices, but implementing a secure deployment requires adherence to best practices.

Best Practices for Securing Microservices

- Use Network Policies: Implement Kubernetes Network Policies to control traffic flow between microservices and ensure that only authorized services can communicate with each other.

- Role-Based Access Control (RBAC): Use RBAC to define who can access which resources within Kubernetes, ensuring that only authorized users or services can interact with sensitive microservices or data.

- Secure Container Images: Regularly scan your container images for vulnerabilities using tools like Clair, Anchore, or Trivy to ensure that the images you use are free of known security issues.

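- Encrypt Secrets and Configurations: Use Kubernetes Secrets to store sensitive information like API keys and passwords instead of baking it into images or manifests. Secret values are only base64-encoded by default, so enable encryption at rest for the cluster’s data store and restrict access to Secrets with RBAC.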

- Service-to-Service Authentication: Implement mutual TLS (mTLS) for secure communication between microservices, ensuring that each service can authenticate the other before allowing communication.
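
Two of the practices above lend themselves to a short sketch: a NetworkPolicy that admits traffic to one service only from a named client, and a Secret manifest. The app labels `orders` and `checkout`, the port, and the secret contents are hypothetical. Note the assumption that the cluster's network plugin enforces NetworkPolicy; on a plugin that does not, the policy is accepted but has no effect.

```python
import base64
import json


def allow_ingress_from(target_app: str, client_app: str, port: int) -> dict:
    """Allow ingress to pods labeled app=<target_app> only from app=<client_app>."""
    return {
        "apiVersion": "networking.k8s.io/v1",
        "kind": "NetworkPolicy",
        "metadata": {"name": f"{target_app}-allow-{client_app}"},
        "spec": {
            "podSelector": {"matchLabels": {"app": target_app}},
            "policyTypes": ["Ingress"],
            "ingress": [{
                "from": [{"podSelector": {"matchLabels": {"app": client_app}}}],
                "ports": [{"protocol": "TCP", "port": port}],
            }],
        },
    }


def opaque_secret(name: str, values: dict) -> dict:
    """Build an Opaque Secret; values in `data` must be base64-encoded."""
    # base64 is an encoding, not encryption: anyone who can read the Secret
    # object can decode it, which is why encryption at rest and RBAC matter.
    data = {k: base64.b64encode(v.encode()).decode() for k, v in values.items()}
    return {
        "apiVersion": "v1",
        "kind": "Secret",
        "metadata": {"name": name},
        "type": "Opaque",
        "data": data,
    }


print(json.dumps(allow_ingress_from("orders", "checkout", 8080), indent=2))
print(json.dumps(opaque_secret("orders-api", {"API_KEY": "example-value"}), indent=2))
```

Once a pod is selected by any ingress policy, traffic that no policy allows is dropped, so policies like this one are usually added service by service.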

6. Monitoring and Observability in Kubernetes

Effective monitoring and observability are essential for keeping microservices healthy and identifying issues before they affect users. Kubernetes and its ecosystem offer several tools for collecting metrics, logs, and traces to help DevOps teams monitor microservice performance.

Best Practices for Monitoring and Observability

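- Use Kubernetes Metrics Server: The Metrics Server collects CPU and memory usage for nodes and pods and exposes it through the Metrics API, which powers kubectl top and the Horizontal Pod Autoscaler. It keeps no history, so pair it with a full monitoring system such as Prometheus for long-term metrics.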

- Centralized Logging: Implement a centralized logging solution, such as ELK Stack (Elasticsearch, Logstash, Kibana) or Fluentd, to aggregate logs from all your microservices and make it easier to identify issues.

- Distributed Tracing: Use distributed tracing tools like Jaeger or Zipkin to track requests as they flow through multiple microservices, helping identify bottlenecks and performance issues.

- Alerting and Dashboards: Set up alerting based on specific thresholds (e.g., error rates, response times) and use visualization tools like Grafana to create dashboards for real-time monitoring of your microservices’ health.

- Health Checks and Probes: Use liveness and readiness probes in Kubernetes to monitor the health of your microservices. When a liveness probe fails, Kubernetes restarts the container; when a readiness probe fails, the pod is removed from Service endpoints until it is ready to serve traffic again.
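
Probes need something to call, so the sketch below pairs a minimal HTTP probe endpoint with the matching probe settings for a container spec. The paths `/healthz` and `/readyz`, port 8080, the timing values, and all function names are assumptions chosen for the example; a real service would base readiness on its own dependencies.

```python
from http.server import BaseHTTPRequestHandler, HTTPServer

READY = {"value": False}  # set to True once startup work has finished


def probe_status(path: str, ready: bool) -> int:
    """HTTP status for a probe path: 200 passes the probe, 503 fails it."""
    if path == "/healthz":
        return 200  # the process is up and able to answer requests
    if path == "/readyz":
        return 200 if ready else 503
    return 404


class ProbeHandler(BaseHTTPRequestHandler):
    def do_GET(self):
        self.send_response(probe_status(self.path, READY["value"]))
        self.end_headers()


def make_server(port: int = 8080) -> HTTPServer:
    """Create (but do not start) the probe server."""
    return HTTPServer(("", port), ProbeHandler)


# Probe settings to merge into the container entry of a pod template.
PROBES = {
    "livenessProbe": {
        "httpGet": {"path": "/healthz", "port": 8080},
        "periodSeconds": 10,
        "failureThreshold": 3,
    },
    "readinessProbe": {
        "httpGet": {"path": "/readyz", "port": 8080},
        "periodSeconds": 5,
    },
}
```

Calling `make_server().serve_forever()` starts the endpoint. Keeping the two paths separate lets a slow-starting service report "alive but not ready" instead of being restarted during startup.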

Optimizing Microservices Deployments with Kubernetes

Kubernetes has become an essential tool for deploying and managing microservices at scale. By following best practices for containerization, automation, scaling, service discovery, security, and monitoring, DevOps teams can optimize their microservices deployments for performance, reliability, and security. As the complexity of microservices architectures continues to grow, Kubernetes will play a key role in ensuring that these systems are scalable, maintainable, and efficient.

By leveraging the full power of Kubernetes, DevOps teams can streamline their development processes, improve the resilience of their applications, and provide a better overall user experience. Microservices, when deployed correctly with Kubernetes, enable businesses to build flexible, agile, and scalable systems that can easily adapt to changing business needs.